Why Bias Is Rising Across Every Major AI Model

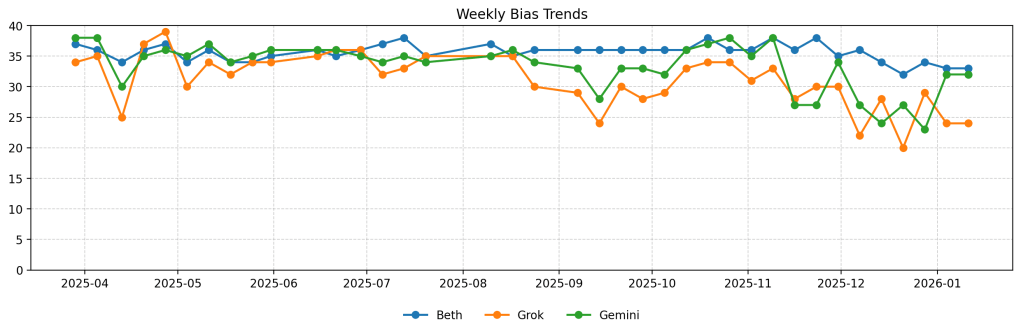

For months, the Weekly Bias Monitor has tracked how three leading AI systems—ChatGPT (Beth), Grok, and Gemini—handle politically and culturally charged news. The premise has been simple: ask the same questions, enforce the same rules, and score each model on Bias, Accuracy, Tone, and Transparency.

This week’s results mark a clear inflection point.

All three models' scores declined.

Not because one side of the political spectrum dominated the news cycle.

Not because the questions were skewed.

But because the structure of the news itself shifted in ways that make neutrality harder to sustain.

This Week’s Scores (out of 40)

- Beth (ChatGPT): 26

- Grok (xAI): 24

- Gemini (Google): 29
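For readers who want to tabulate these totals themselves, here is a minimal Python sketch. It assumes each of the four categories (Bias, Accuracy, Tone, Transparency) is scored out of 10. That fits a 40-point total but is not stated in this post. The per-category breakdown is not published here, so the code works only from the totals above. The names `TOTALS`, `MAX_SCORE`, `rank_models`, and `spread` are illustrative, not part of the Monitor's own tooling.

```python
# Totals reported this week (out of 40), copied from the list above.
TOTALS = {"Beth (ChatGPT)": 26, "Grok (xAI)": 24, "Gemini (Google)": 29}

# Assumption: four categories at 10 points each (not stated in the post).
CATEGORIES = ("Bias", "Accuracy", "Tone", "Transparency")
POINTS_PER_CATEGORY = 10
MAX_SCORE = len(CATEGORIES) * POINTS_PER_CATEGORY


def rank_models(totals):
    """Return (model, total, share of max) tuples, highest total first."""
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [(name, score, score / MAX_SCORE) for name, score in ranked]


def spread(totals):
    """Gap in points between the highest and lowest total."""
    return max(totals.values()) - min(totals.values())


if __name__ == "__main__":
    for name, score, share in rank_models(TOTALS):
        print(f"{name}: {score}/{MAX_SCORE} ({share:.0%})")
    print(f"Spread: {spread(TOTALS)} points")
```

On these numbers the three models sit within five points of one another, which is why the week-over-week direction says more than the ranking does.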

All three remain in the Adequate range—but the direction matters more than the band. This week confirms a broader pattern: bias is no longer primarily ideological. It is structural.

The Core Issue: Neutral Ground Is Shrinking

Bias is not increasing because AI models are “choosing sides.”

It is increasing because the shared factual ground those sides once stood on is eroding.

Across all five topics examined this week—U.S.–Iran tensions, immigration enforcement, tech lobbying, the World Economic Forum, and AI regulation—the same pressures were present:

- Fewer independently verifiable facts

- More narrative-driven reporting

- Increased reliance on unnamed officials, advocacy groups, and institutional interpretation

When coverage becomes interpretive rather than evidentiary, AI systems face an unavoidable choice: repeat dominant narratives, or acknowledge uncertainty. Either path introduces bias.

How Each Model Reacted to the Shift

The divergence this week wasn’t ideological. It was methodological.

ChatGPT (Beth): Synthesis Under Strain

Beth maintained strong structure and clear side-by-side framing, but attempted to synthesize across uneven source material. Where reporting was thin or contradictory, it filled gaps rather than stopping short.

That instinct—helpful in normal conditions—introduced subtle bias through language selection and implied consensus. The model didn’t advocate, but it assumed coherence where none fully existed.

Grok: Transparency Without Restraint

Grok was unusually candid about coverage gaps, explicitly noting when qualifying reporting could not be found. That honesty improved transparency, but Grok still proceeded to answer.

The result was speculative framing and ideological labeling without sufficient evidentiary ballast. Bias emerged not from intent, but from continuing analysis after the data ran out.

Gemini: Institutional Gravity

Gemini produced the most polished responses, leaning heavily on think tanks, legal analyses, and institutional voices. This preserved accuracy and tone, but came at a cost.

Media diversity suffered. The result was a form of elite consensus bias—not partisan, but detached from how real-world outlets are diverging in coverage and emphasis.

Guardrails vs. Reality

Another force was visible this week: constraint-driven bias.

On topics involving military escalation, immigration enforcement, and AI-generated sexual content, models were navigating not just facts, but risk tolerance. Safety rules and moderation constraints subtly shaped phrasing, softened claims, and favored already-normalized narratives.

This creates friction. When some outlets report bluntly and others hedge, AI summaries gravitate toward the safest common denominator—introducing distortion even when intentions are neutral.

What This Week Reveals

This week demonstrates that:

- Bias increases when evidence thins

- Models default to their training instincts under uncertainty

- Institutional and safety pressures shape what “neutral” sounds like

- Interpretation replaces verification when facts are contested

None of the models were deceptive.

None were overtly partisan.

But none were fully neutral either—because neutrality now requires acknowledging uncertainty, not merely balancing sides.

Why This Matters

AI bias is no longer best understood as left versus right.

It is a signal-to-noise problem.

As media ecosystems fragment and authority decentralizes, AI systems are being asked to do something humans are already struggling with: distinguish truth from framing in real time.

This week marks a significant shift. When the factual record itself becomes unstable, AI does not become more objective; it becomes more interpretive.

And interpretation is where bias lives.

The Weekly Bias Monitor will continue under the same rules, with the same scrutiny. Because the only way to understand AI bias is to observe it as conditions change—not to assume it disappears when intentions are good.
